Beware the Sunset Trap: Why Your Legacy Software Is a Ticking Time Bomb (and AI Is Lighting the Fuse)

Imagine you run a successful business or administer a public agency. You spend your days carrying out your mission-critical work. You care for people. Your computer systems are mature and have been running for a decade or more. Your original developers have long since moved on, and your updates are sporadic, driven by security scanner reports that focus on CVEs and deprecated OS versions. Organizations often treat this kind of software as a capital expenditure with upfront development rather than as an ongoing maintenance expense.

Then one day, a critical feature suddenly stops working and requires unplanned emergency development.

You see, unbeknownst to you (or long forgotten), the web developers you hired back in 2012, when you last built your custom web application, integrated it with a Google service. It has been there ever since, quietly receiving data and returning results, working day in and day out without incident. But now it has stopped. You have a developer investigate, and it turns out Google announced the service's deprecation on some obscure blog two years ago, and the announced date has now arrived. What do you do? You have to make whatever changes are needed to reimplement the functionality.

I have seen this pattern over and over during my career. Technology moves fast, and features get announced as “deprecated,” which means that while they still work today, they will stop working one day. A deprecation notice often describes the replacement. For example: “function foo() is deprecated and will be removed in a future release, use foobar() instead.” When your developers regularly update your software, they see these build (or runtime) warnings and plan accordingly as part of routine maintenance.
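To make the warning mechanism concrete, here is a minimal Python sketch (Python is an illustrative choice; the idea applies to any language). `foo()` and `foobar()` are the hypothetical names from the notice quoted above, and `run_with_deprecations_as_errors` is a hypothetical helper showing how a test suite or CI job might escalate deprecation warnings into hard failures so they cannot be quietly ignored.

```python
import warnings


def foobar(x):
    """The replacement API."""
    return x * 2


def foo(x):
    """Deprecated: kept only so existing callers keep working."""
    warnings.warn(
        "foo() is deprecated and will be removed in a future release, use foobar() instead",
        DeprecationWarning,
        stacklevel=2,  # point the warning at the caller, not at this function
    )
    return foobar(x)


def run_with_deprecations_as_errors(func, *args):
    """Call func with DeprecationWarning escalated to an exception, as a CI check might."""
    with warnings.catch_warnings():
        warnings.simplefilter("error", DeprecationWarning)
        return func(*args)
```

Calling `run_with_deprecations_as_errors(foo, 21)` raises `DeprecationWarning`, while `run_with_deprecations_as_errors(foobar, 21)` returns 42. Python's own `-W error::DeprecationWarning` command-line option applies the same escalation to an entire run.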

Unlike with traditional software, AI companies change models frequently, often with little meaningful notice. OpenAI does maintain a published deprecation list, but it frequently retires model versions (e.g., GPT-3.5-Turbo-0613), forcing developers to migrate to models that may behave differently. This model drift is an extreme source of brittleness for any meaningful integration.
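One low-tech defense is to write down every announced sunset date for the services and model versions you depend on and check that list automatically, so a notice on an obscure blog becomes an alert months before the shutdown. The following is a minimal Python sketch assuming a hand-maintained registry; the dependency names and dates are hypothetical placeholders, not real vendor dates.

```python
from datetime import date, timedelta

# Hypothetical registry: dependency name -> announced sunset date.
# Copy real dates from each vendor's deprecation notice; these are placeholders.
SUNSET_REGISTRY = {
    "example-chart-service": date(2030, 1, 1),
    "example-model-snapshot": date(2030, 3, 1),
    "example-geocoding-api": date(2031, 1, 1),
}


def sunset_alerts(registry, today, warn_window=timedelta(days=180)):
    """Return alerts for dependencies past, or within warn_window of, their sunset date."""
    alerts = []
    # Sort by date so the most urgent items come first.
    for name, sunset in sorted(registry.items(), key=lambda item: item[1]):
        if sunset <= today:
            alerts.append(f"RETIRED: {name} reached its sunset date {sunset.isoformat()}")
        elif sunset - today <= warn_window:
            days_left = (sunset - today).days
            alerts.append(f"UPCOMING: {name} sunsets in {days_left} days ({sunset.isoformat()})")
    return alerts


if __name__ == "__main__":
    for alert in sunset_alerts(SUNSET_REGISTRY, date.today()):
        print(alert)
```

Run from a scheduled CI job, this turns a forgotten blog post into a failing check long before the lights go out. The 180-day window is an arbitrary default.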

But the real challenge comes when something is deprecated without a replacement: the so-called sunset. The functionality is going away, and nothing is replacing it. You're on your own to come up with another approach. Sometimes another library or vendor offers a suitable alternative, and sometimes none does. Either way, this is disruptive, and it is expensive! Changing anything in a legacy application risks introducing small errors, and users who are set in their ways often resist change, yet this change is inevitable.

Deprecation without replacement is not limited to cloud services, but it is most acute with them, because once the vendor turns out the lights, the service is truly gone. With open source libraries, you at least have the option to adopt the library yourself and pay your developers to maintain it independently. And I posit that with AI agents, it will be an order of magnitude worse, because models and their behavior change with little or no warning.

Change is inevitable. We have seen this many times with our customers; a few examples:

- PDF rendering via WickedPDF: a Ruby library that let applications render PDF invoices and documents from HTML

- The Google Chart API: rendered easy-to-read charts and graphs from simple URLs embedded in HTML

- Google Datastore: shut down even though it was not compatible with its Firebase replacement! This was the subject of a major case study, “Case Study: Complex Legacy Google Datastore Conversion to PostgreSQL on AWS.”

These are not theoretical; they are real examples that affected organizations I know. And it is not limited to behind-the-scenes technology: Google is especially aggressive about killing services, with hundreds documented on the Killed by Google website. And that doesn't even count library-level changes like the WickedPDF example above.

What can you do to minimize the disruption? First, you and your developers need to understand that every dependency and every API call is a dangerous third-party entanglement: a risky integration.
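One common way to contain that entanglement is to hide each third-party service behind a small interface your own code owns, so a sunset means writing one new adapter instead of hunting through the whole application. Below is a minimal Python sketch of this adapter pattern; `ChartRenderer`, both renderer classes, `sales_report`, and the `charts.example.com` URL are hypothetical.

```python
from typing import Protocol
from urllib.parse import urlencode


class ChartRenderer(Protocol):
    def render_bar_chart(self, labels: list[str], values: list[float]) -> str:
        """Return something the page can embed (a URL or inline markup)."""
        ...


class HypotheticalVendorChartRenderer:
    """Hypothetical adapter around a hosted chart service; the base URL is a placeholder."""

    def __init__(self, base_url: str = "https://charts.example.com/render"):
        self.base_url = base_url

    def render_bar_chart(self, labels: list[str], values: list[float]) -> str:
        query = urlencode({"labels": ",".join(labels), "values": ",".join(str(v) for v in values)})
        return f"{self.base_url}?{query}"


class TextChartRenderer:
    """Local fallback with no third-party dependency: a plain-text bar chart."""

    def render_bar_chart(self, labels: list[str], values: list[float]) -> str:
        peak = max(values) if values else 0
        lines = []
        for label, value in zip(labels, values):
            width = round(20 * value / peak) if peak else 0  # scale bars to 20 characters
            lines.append(f"{label:<10} {'#' * width} {value}")
        return "\n".join(lines)


def sales_report(renderer: ChartRenderer, sales: dict[str, float]) -> str:
    # Business code depends only on the ChartRenderer interface, never on a vendor.
    return renderer.render_bar_chart(list(sales), list(sales.values()))
```

If the hosted service is sunset, only the adapter changes: callers switch from `sales_report(HypotheticalVendorChartRenderer(), sales)` to `sales_report(TextChartRenderer(), sales)`, and `sales_report` itself is untouched.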

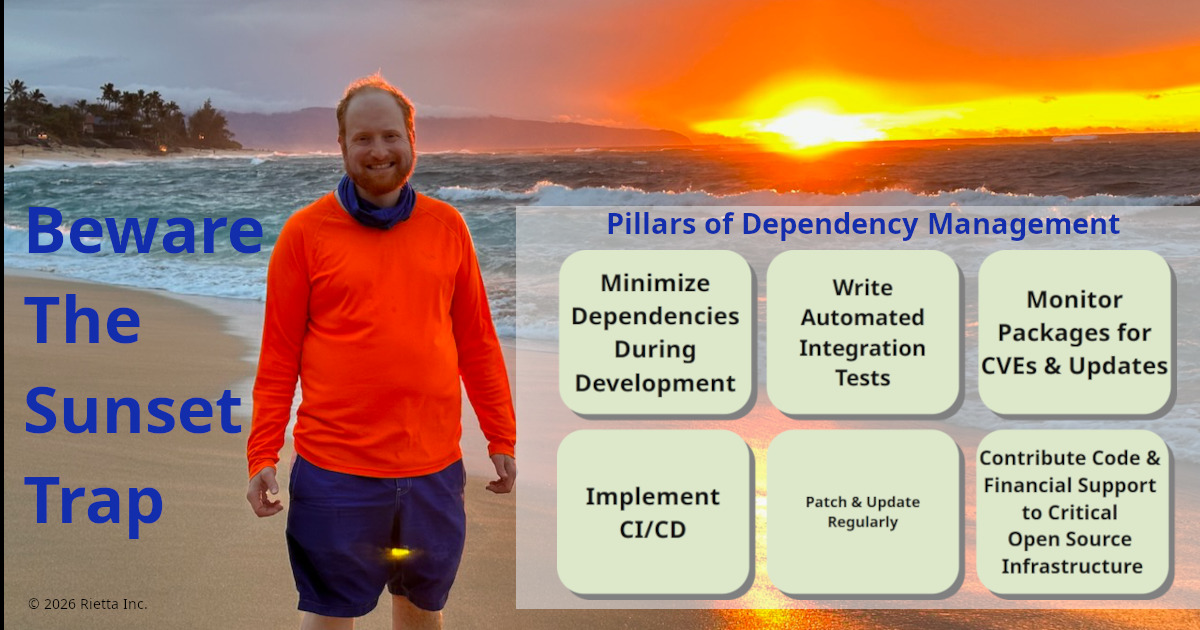

For years, I have taught the ‘Six Pillars of Dependency Management’ in my lectures to help organizations move from reactive patching to proactive resilience:

- Minimize dependencies during development

- Write automated integration tests

- Monitor packages for CVEs and updates

- Implement CI/CD

- Patch and regularly update

- Contribute to critical open source infrastructure
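Minimizing and monitoring dependencies both start with knowing exactly what you depend on. Here is a minimal Python sketch that uses the standard library's `importlib.metadata` to list installed distributions and flag any that nobody has reviewed. The `APPROVED` allowlist is a hypothetical stand-in for your own dependency policy, and an actual CVE check would still require a dedicated vulnerability scanner.

```python
from importlib.metadata import distributions

# Hypothetical allowlist of packages your team has reviewed and agreed to depend on.
APPROVED = {"pip", "setuptools"}


def installed_packages():
    """Map each installed distribution's lowercased name to its version."""
    packages = {}
    for dist in distributions():
        name = dist.metadata.get("Name")
        if name:  # skip broken installs that lack a Name field
            packages[name.lower()] = dist.version
    return packages


def unreviewed(installed, approved):
    """Return the sorted names of installed packages that are not on the allowlist."""
    return sorted(name for name in installed if name.lower() not in approved)


if __name__ == "__main__":
    inventory = installed_packages()
    for name in unreviewed(inventory, APPROVED):
        print(f"unreviewed dependency: {name} {inventory[name]}")
```

Running this in CI makes every new dependency a visible, deliberate decision rather than a silent addition.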

These are the investments you must make for any mission-critical, line-of-business software. In short, invest in maintenance!

But I know you want to hear more about the AI risk. It is not just about the API going dark; it is about behavioral sunsetting. AI is an unstable, non-deterministic dependency. While a Google Chart URL stayed the same for years until the service died, an AI model can ‘drift’ or be replaced by a newer version that interprets your instructions differently, breaking your business logic without a single line of your code changing.

To work with a non-deterministic API, you need a different approach, one that uses techniques such as state machines and data validation to handle this inherently untrustworthy input to your system. I touched on that in my recent article, “Use AI to Describe Images as a Background Job in Ruby on Rails.” Expect more from me on this in the coming months.
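To illustrate the state machine and validation idea, here is a minimal Python sketch; it is not the code from the Rails article. It assumes the model was asked to return JSON with hypothetical `description` and `contains_people` fields. `JobState`, `ImageDescriptionJob`, `validate_model_output`, `process`, and the length limit are all hypothetical, and the model call itself is omitted, with `None` standing in for a failed request or a retired model.

```python
import json
from enum import Enum
from typing import List, Optional, Tuple

MAX_DESCRIPTION_LENGTH = 500  # hypothetical limit


class JobState(Enum):
    PENDING = "pending"
    PROCESSING = "processing"
    SUCCEEDED = "succeeded"
    NEEDS_REVIEW = "needs_review"
    FAILED = "failed"


# Legal transitions; anything else is a bug, not a state to silently accept.
ALLOWED_TRANSITIONS = {
    JobState.PENDING: {JobState.PROCESSING},
    JobState.PROCESSING: {JobState.SUCCEEDED, JobState.NEEDS_REVIEW, JobState.FAILED},
    JobState.NEEDS_REVIEW: {JobState.SUCCEEDED, JobState.FAILED},
    JobState.SUCCEEDED: set(),
    JobState.FAILED: {JobState.PENDING},  # allow a retry
}


class ImageDescriptionJob:
    def __init__(self) -> None:
        self.state = JobState.PENDING
        self.description: Optional[str] = None
        self.errors: List[str] = []

    def transition(self, new_state: JobState) -> None:
        if new_state not in ALLOWED_TRANSITIONS[self.state]:
            raise ValueError(f"illegal transition {self.state.value} -> {new_state.value}")
        self.state = new_state


def validate_model_output(raw: str) -> Tuple[Optional[dict], List[str]]:
    """Treat model output as untrusted input: parse it and check every field."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None, ["output is not valid JSON"]
    if not isinstance(data, dict):
        return None, ["output is not a JSON object"]
    errors = []
    description = data.get("description")
    if not isinstance(description, str) or not description.strip():
        errors.append("description must be a non-empty string")
    elif len(description) > MAX_DESCRIPTION_LENGTH:
        errors.append("description is too long")
    if not isinstance(data.get("contains_people"), bool):
        errors.append("contains_people must be true or false")
    return (None if errors else data), errors


def process(job: ImageDescriptionJob, raw_model_output: Optional[str]) -> ImageDescriptionJob:
    job.transition(JobState.PROCESSING)
    if raw_model_output is None:
        # The API call failed or the model was retired: record it and stop.
        job.errors = ["no response from model"]
        job.transition(JobState.FAILED)
        return job
    data, errors = validate_model_output(raw_model_output)
    if data is None:
        # Output failed validation: park it for a human instead of saving bad data.
        job.errors = errors
        job.transition(JobState.NEEDS_REVIEW)
    else:
        job.description = data["description"].strip()
        job.transition(JobState.SUCCEEDED)
    return job
```

The explicit transition table means a surprising model response can only move a job into `NEEDS_REVIEW` or `FAILED`; it can never silently become a `SUCCEEDED` record containing bad data.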

The good news is that a disciplined approach to dependency management will serve you very well. But the key lesson is that you need to invest in ongoing maintenance to avoid disruptive and expensive surprises in the years to come. By maintaining a proactive stance, you can spread your development costs across years rather than facing a big surprise bill from an outside consultancy you had to bring in on a crisis basis.

For our part, we much prefer working with clients over the long term rather than just serving as the cleanup crew after a major disruption.